Reinforcement learning (RL) has become a popular tool for difficult asset allocation problems. With RL, one can build models that keep learning and adapting as the environment changes, which suits fast-paced and unpredictable financial markets. This tutorial gives an overview of how RL can be used to create asset allocation strategies and provides a concrete Python example for forming investment actions.

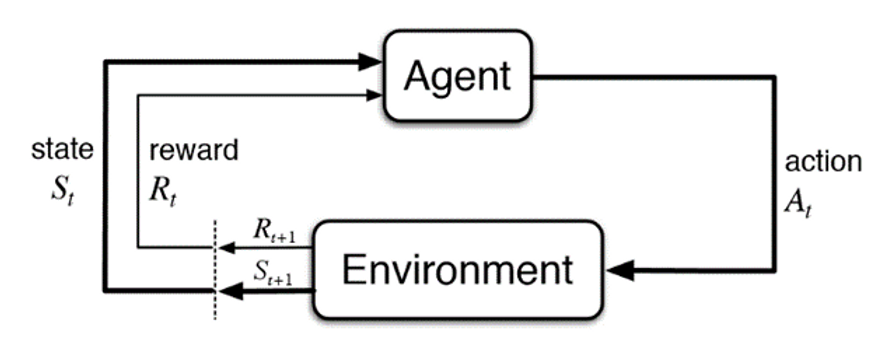

Reinforcement learning is a type of machine learning in which an agent learns how to behave in an environment by performing actions and receiving feedback in the form of rewards or penalties. This approach can be used to optimize asset allocation in investment portfolios.

At a high level, asset allocation is the process of dividing an investor’s portfolio among asset classes to balance potential gains against risk. By using RL, investors can create models that learn from past investment decisions and adapt to changing market conditions.

KEY TAKEAWAYS:

- Deep Reinforcement Learning (DRL) is a framework that combines deep learning with reinforcement learning to make decisions in complex environments.

- Asset allocation refers to the process of distributing investment funds across different assets to optimize returns and manage risk.

- DRL for asset allocation uses deep neural networks as function approximators to learn investment strategies.

- DRL models learn from historical data and use a reward signal to guide their decision-making process.

- The state in reinforcement learning for asset allocation can include market data, economic indicators, and other relevant factors.

- Actions in DRL for asset allocation involve determining the allocation percentages for different assets.

- The reward signal in DRL for asset allocation is based on investment performance, such as portfolio returns or risk-adjusted metrics.

- DRL models employ techniques like Q-learning or policy gradient methods to update their neural network parameters and improve decision-making.

- DRL for asset allocation has shown promise in improving portfolio performance and adapting to changing market conditions.

- Challenges in DRL for asset allocation include data quality, model interpretability, and the risk of overfitting historical data.

- Ongoing research focuses on improving DRL models, addressing these challenges, and exploring applications in different investment contexts.
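For reference, the two families of update rules named in the takeaways can be written out. The notation is standard textbook notation rather than anything specific to this tutorial: $s_t$ is the state, $a_t$ the action, $r_{t+1}$ the reward, $\alpha$ the learning rate, $\gamma$ the discount factor, $\pi_\theta$ a policy with parameters $\theta$, and $G_t$ the discounted return from time $t$ onward.

```latex
% Q-learning: move Q(s_t, a_t) toward the bootstrapped target
Q(s_t, a_t) \leftarrow Q(s_t, a_t)
  + \alpha \left[ r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \right]

% Policy gradient (REINFORCE form): gradient of the expected return
\nabla_\theta J(\theta)
  = \mathbb{E}_{\pi_\theta} \left[ \sum_{t} \nabla_\theta \log \pi_\theta(a_t \mid s_t) \, G_t \right]
```

In DRL, $Q$ or $\pi_\theta$ is represented by a neural network, and these expressions drive the gradient steps on its parameters.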

Examples:

- Q-Learning for Portfolio Optimization:

  - Q-learning is used to train a deep neural network agent to make asset allocation decisions.

  - The agent interacts with an environment representing the financial market, receiving information about asset prices, market trends, and other relevant factors.

  - The agent learns to optimize the allocation of assets by estimating the expected cumulative rewards associated with different actions (e.g., buying, selling, or holding specific assets) using the Q-learning algorithm.

  - The deep neural network approximates the Q-values, allowing the agent to handle large state spaces with a discrete set of actions.

- Policy Gradient for Portfolio Management:

  - Policy gradient methods, such as Proximal Policy Optimization (PPO) or Trust Region Policy Optimization (TRPO), are used for asset allocation in portfolio management.

  - A deep neural network is trained to directly output a portfolio allocation policy given the current market conditions and historical data.

  - The neural network policy is updated iteratively through interactions with the financial market, using gradient-based optimization to maximize the expected cumulative returns or achieve other specified objectives.

  - The policy gradient approach allows for direct optimization of the allocation strategy and can handle continuous action spaces, making it suitable for complex asset allocation problems.
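The idea of a policy that outputs an allocation directly can be illustrated with a softmax layer, which turns one raw score per asset into positive weights that sum to 1. This is a minimal sketch: the linear `policy_weights` function, the random `features`, and the random `params` are hypothetical stand-ins for a trained network and real market inputs.

```python
import numpy as np

def softmax(scores):
    """Convert raw scores to positive weights that sum to 1."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exp_scores = np.exp(shifted)
    return exp_scores / exp_scores.sum()

def policy_weights(features, params):
    """Hypothetical linear policy: one score per asset, then softmax."""
    scores = features @ params  # shape (num_assets,)
    return softmax(scores)

rng = np.random.default_rng(0)
num_features, num_assets = 5, 4
features = rng.normal(size=num_features)              # stand-in for market features
params = rng.normal(size=(num_features, num_assets))  # stand-in for trained parameters
weights = policy_weights(features, params)
print(weights, weights.sum())
```

Because the output is a continuous weight vector, no discretization of the action space is needed, which is the property the policy gradient bullets describe.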

The Goal of Reinforcement Learning for Asset Allocation

The goal of asset allocation is to maximize the return on investment while minimizing risk. The traditional approach relies on a static allocation strategy based on a set of predetermined rules. However, this approach does not account for changes in the market or in the investor’s risk tolerance.

- Reinforcement learning can be used to develop a dynamic asset allocation strategy that adjusts based on changes in the market and the investor’s risk tolerance. The agent learns by interacting with the environment, which in this case is the stock market.

- The agent takes actions based on the current state of the market and receives feedback in the form of rewards or penalties based on the performance of its actions.

How to Create a Model of Reinforcement Learning for Asset Allocation?

The first step in asset allocation using RL is to create a model that represents the investor’s portfolio. This model usually consists of three components:

- A state space

- An action space

- A reward function

The state space includes variables such as asset prices, risk factors, and investor sentiment. The action space consists of the different investment actions that can be taken, such as buying, selling, or holding. Finally, the reward function determines the investor’s reward for making certain investment decisions.
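The three components can be sketched as a small environment class. This is a toy illustration under simplifying assumptions: a single risky asset plus cash, a state made of only the latest return, and hand-written returns. The class name `AllocationEnv` and its methods are hypothetical, not part of any library.

```python
import numpy as np

class AllocationEnv:
    """Toy single-asset environment; all names here are illustrative."""

    # Action space: fraction of the portfolio held in the asset (rest in cash)
    ACTIONS = np.linspace(0.0, 1.0, 11)

    def __init__(self, asset_returns):
        self.asset_returns = np.asarray(asset_returns, dtype=float)
        self.t = 0

    def reset(self):
        """Start a new episode and return the first state."""
        self.t = 0
        return self._state()

    def _state(self):
        # State space: here just the most recent return of the asset
        return self.asset_returns[self.t]

    def step(self, action_index):
        """Apply an allocation, advance one period, return (state, reward, done)."""
        allocation = self.ACTIONS[action_index]
        self.t += 1
        # Reward function: portfolio return over the period just ended
        reward = allocation * self.asset_returns[self.t]
        done = self.t == len(self.asset_returns) - 1
        return self._state(), reward, done

env = AllocationEnv([0.01, -0.02, 0.03, 0.00])  # made-up returns
state = env.reset()
total_reward, done = 0.0, False
while not done:
    state, reward, done = env.step(5)  # always hold 50% in the asset
    total_reward += reward
print(total_reward)
```

A richer state (several assets, risk factors, sentiment scores) would change only `_state`, while a risk-adjusted objective would change only the reward line in `step`.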

How to Train a Reinforcement Learning Model for Asset Allocation?

Once the model is defined, the next step is to choose a learning algorithm to train on it. In RL, the component that learns is called the agent. The agent interacts with the model and learns which asset allocation decisions work best.

The RL agent can use techniques such as Q-learning, SARSA, and DQN to learn which allocations maximize the investor’s returns.
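The difference between Q-learning and SARSA comes down to the target each one bootstraps from. The sketch below shows both targets on a tiny hand-made Q-table; the function names and the numbers are illustrative assumptions.

```python
import numpy as np

def q_learning_target(q_table, reward, next_state, gamma):
    """Off-policy target: assume the best action is taken in the next state."""
    return reward + gamma * np.max(q_table[next_state])

def sarsa_target(q_table, reward, next_state, next_action, gamma):
    """On-policy target: use the action the agent actually takes next."""
    return reward + gamma * q_table[next_state, next_action]

# Rows are states, columns are actions (made-up values)
q_table = np.array([[0.0, 0.0],
                    [1.0, 2.0]])
reward, next_state, next_action, gamma = 0.5, 1, 0, 0.9
q_target = q_learning_target(q_table, reward, next_state, gamma)
s_target = sarsa_target(q_table, reward, next_state, next_action, gamma)
print(q_target, s_target)
```

DQN uses the same target as Q-learning but replaces the table lookup with a neural network that predicts the Q-values.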

- Finally, once the RL agent is trained and the best allocation decisions are determined, it is time to form investment actions. This can be done by applying the RL agent’s decisions to the investor’s portfolio.

- For example, if the RL agent determines that a certain stock should be bought, the investor can buy the desired amount of the stock.

Similarly, if the RL agent determines that a certain stock should be sold, the investor can sell the desired amount of the stock.
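Turning the agent's output into orders can be sketched as a rebalancing calculation: compare the target weight of each stock with its current value and trade the difference. The helper `trades_from_weights`, the holdings, and the prices below are hypothetical; the sketch ignores cash balances, transaction costs, and whole-share rounding.

```python
def trades_from_weights(target_weights, holdings, prices):
    """Return the number of shares to buy (+) or sell (-) for each ticker."""
    portfolio_value = sum(holdings.get(t, 0.0) * p for t, p in prices.items())
    trades = {}
    for ticker, price in prices.items():
        target_value = target_weights.get(ticker, 0.0) * portfolio_value
        current_value = holdings.get(ticker, 0.0) * price
        trades[ticker] = (target_value - current_value) / price
    return trades

# Illustrative numbers only, not real quotes
holdings = {'AAPL': 10, 'MSFT': 0}
prices = {'AAPL': 100.0, 'MSFT': 200.0}
target_weights = {'AAPL': 0.5, 'MSFT': 0.5}
trades = trades_from_weights(target_weights, holdings, prices)
print(trades)
```

A negative number means shares to sell and a positive number means shares to buy, which matches the buy and sell cases described above.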

How to Use Reinforcement Learning for Asset Allocation?

Portfolio Optimization Using Reinforcement Learning

Reinforcement learning (RL) can be a powerful technique for asset allocation, enabling the optimization of investment strategies based on feedback from the market. Here’s a general approach to using RL for asset allocation:

- Define the problem: Determine the specific objective of your asset allocation strategy. For example, you may want to maximize portfolio returns, minimize risk, or achieve a specific risk-return trade-off.

- State and action space: Define the state and action spaces for your RL model. The state space represents the information available to the agent at a given time, such as historical prices, economic indicators, or other relevant data. The action space comprises the possible investment decisions, such as allocating percentages of the portfolio to different assets or asset classes.

- Reward function: Design a reward function that quantifies the performance of the investment strategy. The reward function can be based on various factors, such as portfolio returns, risk-adjusted returns, or even specific financial metrics like the Sharpe ratio. The reward function should align with your defined objective.

- Model selection: Choose an RL algorithm suitable for your asset allocation problem. Popular algorithms include Q-learning, Deep Q-Networks (DQN), Proximal Policy Optimization (PPO), and REINFORCE. Consider the complexity of your problem, the size of the state space, and the availability of historical data when selecting a model.

- Data preparation: Collect and preprocess relevant data for training and testing your RL model. This may include historical price data, fundamental data, economic indicators, or any other data you consider useful for making investment decisions. Standardize or normalize the data as required.

- Training: Train your RL model using historical data. The model learns to select appropriate actions in different states by maximizing the cumulative rewards received over time. This process involves iteratively adjusting the model’s parameters based on the feedback received from the environment.

- Evaluation and optimization: Evaluate the performance of your RL model using out-of-sample data or a simulated trading environment. Measure key metrics like portfolio returns, risk metrics, or other relevant performance indicators. If necessary, refine and optimize your model by adjusting hyperparameters, exploring different reward functions, or experimenting with different architectures.

- Execution: Once you are satisfied with your RL model’s performance, you can deploy it in a live trading environment. Continuously monitor and update your model as new data becomes available to ensure its effectiveness over time.

- Risk management: Implement appropriate risk management techniques alongside your RL-based asset allocation strategy. Consider incorporating stop-loss orders, position-sizing rules, or other risk control measures to protect your portfolio from significant losses.
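Two of the steps above, the reward function and the evaluation on out-of-sample data, can be sketched briefly. The example computes an annualized Sharpe ratio and splits a return series chronologically so that the test period is strictly later than the training period. The function names, the 252 trading days per year, the 80/20 split, and the synthetic returns are all assumptions made for illustration.

```python
import numpy as np

def sharpe_ratio(returns, risk_free_rate=0.0, periods_per_year=252):
    """Annualized Sharpe ratio of a series of per-period returns."""
    excess = np.asarray(returns, dtype=float) - risk_free_rate / periods_per_year
    std = excess.std(ddof=1)
    if std == 0:
        return 0.0  # no variation: treat as neutral rather than divide by zero
    return np.sqrt(periods_per_year) * excess.mean() / std

def chronological_split(returns, train_fraction=0.8):
    """Split a time-ordered series without shuffling."""
    split = int(len(returns) * train_fraction)
    return returns[:split], returns[split:]

rng = np.random.default_rng(0)
simulated = rng.normal(0.0005, 0.01, size=500)  # synthetic daily returns, not market data
train, test = chronological_split(simulated)
print(len(train), len(test), sharpe_ratio(train))
```

Shuffling before splitting would leak future information into training, so a time-ordered split is the safer default for financial data.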

It’s important to note that RL-based asset allocation strategies involve inherent risks, and careful consideration should be given to factors like data quality, model assumptions, and the dynamic nature of financial markets. Additionally, it’s recommended to consult with domain experts and professionals before deploying RL models for real-world investment decisions.

Python Code for Reinforcement Learning

The Python code below gives an example of a tabular Q-learning model trained to allocate among 4 stocks.

    import numpy as np
    import pandas as pd
    import yfinance as yf

    # Download historical data for the assets.
    # auto_adjust=False keeps the 'Adj Close' column in recent yfinance versions.
    tickers = ['AAPL', 'GOOG', 'TSLA', 'MSFT']
    start_date = '2016-01-01'
    end_date = '2022-04-12'
    data = yf.download(tickers, start=start_date, end=end_date, auto_adjust=False)['Adj Close']
    data = data[tickers].dropna()  # keep column order aligned with `tickers`

    # Daily log returns as an array of shape (num_days, num_assets)
    returns = np.log(data / data.shift(1)).dropna().to_numpy()
    num_days = len(returns)

    # Define hyperparameters
    gamma = 0.95  # discount factor
    alpha = 0.1  # learning rate
    epsilon = 0.1  # exploration rate
    num_episodes = 1000

    # Initialize one Q-table per asset with zeros: (asset, state, action)
    num_assets = len(tickers)
    num_states = 11  # number of bins for the discretized daily return
    num_actions = 11  # allocate between 0-100% in 10% increments
    q_table = np.zeros((num_assets, num_states, num_actions))
    asset_idx = np.arange(num_assets)

    # Define helper function to discretize state
    state_min = returns.min(axis=0)
    state_range = returns.max(axis=0) - state_min

    def discretize_state(state):
        """Map each asset's daily return to an integer bin in [0, num_states - 1]."""
        scaled = (state - state_min) / state_range
        return np.round(scaled * (num_states - 1)).astype(int)

    states = discretize_state(returns)  # shape (num_days, num_assets)

    # Define main reinforcement learning loop
    for episode in range(num_episodes):
        # Each episode is one pass over the historical data
        for t in range(num_days - 1):
            state = states[t]

            # Choose an action per asset using epsilon-greedy policy
            greedy = np.argmax(q_table[asset_idx, state], axis=1)
            random_action = np.random.randint(num_actions, size=num_assets)
            explore = np.random.uniform(size=num_assets) < epsilon
            action = np.where(explore, random_action, greedy)

            # Apply action and observe next state and reward
            allocation = action * 0.1
            reward = allocation * returns[t + 1]
            next_state = states[t + 1]

            # Update Q-table using Q-learning update rule
            best_next = np.max(q_table[asset_idx, next_state], axis=1)
            td_error = reward + gamma * best_next - q_table[asset_idx, state, action]
            q_table[asset_idx, state, action] += alpha * td_error

        # Print progress
        if (episode + 1) % 100 == 0:
            print(f'Episode {episode + 1}/{num_episodes}')

    # Choose final policy based on learned Q-table and the most recent state
    final_allocation = np.argmax(q_table[asset_idx, states[-1]], axis=1) * 0.1
    if final_allocation.sum() > 1:
        final_allocation = final_allocation / final_allocation.sum()  # cap total at 100%
    print(f'Final asset allocation: {tickers} -> {final_allocation}')

Portfolio Optimization Using Reinforcement Learning

Portfolio optimization is a classic problem in finance that involves constructing an optimal investment portfolio to maximize returns while minimizing risk. Traditionally, portfolio optimization has been approached using mathematical optimization techniques such as mean-variance optimization, which requires assumptions about the distribution of asset returns. However, with the advancements in machine learning, specifically reinforcement learning, it is possible to tackle portfolio optimization in a data-driven and model-free manner.
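For comparison, the traditional approach can be sketched in a few lines. The example computes the global minimum-variance portfolio, a special case of mean-variance optimization with the closed-form solution w = Σ⁻¹1 / (1ᵀΣ⁻¹1). The covariance matrix is made up for illustration, and the formula allows short positions.

```python
import numpy as np

def min_variance_weights(cov):
    """Global minimum-variance weights: inv(cov) @ 1, normalized to sum to 1."""
    ones = np.ones(cov.shape[0])
    inv_cov_ones = np.linalg.solve(cov, ones)  # avoids forming the inverse explicitly
    return inv_cov_ones / inv_cov_ones.sum()

# Two uncorrelated assets with variances 0.04 and 0.01 (illustrative values)
cov = np.array([[0.04, 0.00],
                [0.00, 0.01]])
weights = min_variance_weights(cov)
print(weights)
```

The result depends entirely on the estimated covariance matrix, which is the kind of distributional assumption the paragraph above refers to.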

Reinforcement learning (RL) is a branch of machine learning that deals with sequential decision-making problems. In the context of portfolio optimization, RL can be used to learn a policy that determines how to allocate funds across a set of assets.

Summary

This tutorial has provided an overview of how RL can be used to create strategies for asset allocation and provided concrete code snippets for forming investment actions.

As RL methods for asset allocation become more popular and sophisticated, they are likely to play a larger role in helping investors make allocation decisions.

Reinforcement learning can provide several advantages for asset allocation in 2023:

- Adaptability: RL algorithms can adapt to changing market conditions and adjust the portfolio allocation accordingly. As the financial markets are constantly evolving and influenced by various macro and microeconomic factors, an adaptive approach can help to achieve better returns and minimize risk.

- Personalization: RL can help to personalize the portfolio allocation based on individual preferences and constraints. This can include factors such as risk tolerance, investment horizon, and liquidity needs.

- Speed: RL algorithms can analyze vast amounts of financial data in real time and make investment decisions quickly. This can help to exploit market inefficiencies and identify investment opportunities before others.

- Data-driven: RL algorithms are data-driven, which means they can learn from past experiences and improve their decision-making ability over time. This can help to reduce the impact of emotions and biases on investment decisions.

- Optimization: RL algorithms can optimize portfolio allocation to achieve specific goals, such as maximizing returns or minimizing risk. This can help to achieve better outcomes than traditional asset allocation approaches.

Overall, reinforcement learning can be a powerful tool for asset allocation in 2023, helping investors pursue better returns and manage risk in an increasingly complex financial landscape.